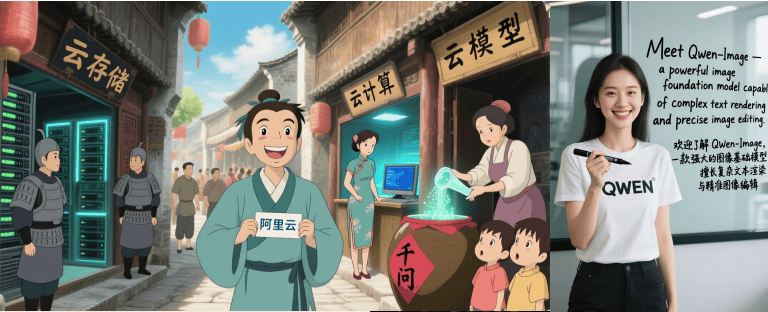

Qwen-Image: Technical Report on Large-Scale Vision–Language Pretraining (2025)

Summary of the Qwen-Image technical report, which describes a large-scale vision–language model (VLM) for multimodal understanding that integrates image recognition with text comprehension and reports state-of-the-art performance.



Overview

Qwen-Image is a large-scale vision–language model that unifies visual recognition and natural language understanding in a single framework. The report claims state-of-the-art performance on a wide range of vision–language benchmarks, including image captioning, text-to-image retrieval, and visual question answering (VQA).

Key Contributions

Introduces a scalable VLM architecture trained on large multimodal datasets.

Achieves strong performance on multimodal reasoning tasks such as VQA and captioning.

Demonstrates adaptability to both vision-centric and language-centric downstream tasks.

Provides insights into scaling laws and pretraining dynamics for vision–language models.

Method (high-level)

Qwen-Image employs a dual-encoder architecture that combines a vision backbone with a language transformer, trained jointly on large-scale multimodal data. Cross-modal alignment is learned through a combination of contrastive objectives and generative pretraining, which lets the model build rich representations that generalize across tasks. This design allows Qwen-Image to handle both understanding tasks (e.g., classification, retrieval) and generation tasks (e.g., captioning).
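The summary does not spell out the report's exact loss functions. As a minimal sketch, assuming a CLIP-style symmetric InfoNCE loss for the contrastive part and a standard next-token cross-entropy for the generative (captioning) part, the joint objective could look like the following. The function names, the temperature of 0.07, and the weight `lam` are hypothetical illustrations, not the report's settings.

```python
import numpy as np

def l2_normalize(x, eps=1e-8):
    """Scale each row to unit L2 norm so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def log_softmax(logits, axis=-1):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def contrastive_loss(img_emb, txt_emb, temperature=0.07):
    """Symmetric InfoNCE (assumed form): image i and text i are the positive pair,
    every other pairing in the batch is treated as a negative."""
    logits = l2_normalize(img_emb) @ l2_normalize(txt_emb).T / temperature  # (B, B)
    diag = np.arange(logits.shape[0])
    loss_i2t = -log_softmax(logits, axis=1)[diag, diag].mean()  # each image picks its text
    loss_t2i = -log_softmax(logits, axis=0)[diag, diag].mean()  # each text picks its image
    return 0.5 * (loss_i2t + loss_t2i)

def caption_loss(token_logits, target_ids):
    """Next-token cross-entropy for the generative objective.
    token_logits: (B, T, V) decoder outputs; target_ids: (B, T) integer token ids."""
    logp = log_softmax(token_logits, axis=-1)
    b, t = np.meshgrid(np.arange(target_ids.shape[0]),
                       np.arange(target_ids.shape[1]), indexing="ij")
    return -logp[b, t, target_ids].mean()

def joint_loss(img_emb, txt_emb, token_logits, target_ids, lam=1.0, temperature=0.07):
    """Hypothetical weighted sum of the two objectives; lam is an assumed weight."""
    return (contrastive_loss(img_emb, txt_emb, temperature)
            + lam * caption_loss(token_logits, target_ids))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.normal(size=(4, 64))
    txt = img + 0.01 * rng.normal(size=(4, 64))  # near-duplicate pairs: well aligned
    logits = rng.normal(size=(4, 5, 32))          # toy decoder outputs, vocab of 32
    targets = rng.integers(0, 32, size=(4, 5))
    print(joint_loss(img, txt, logits, targets, lam=0.5))
```

Summing the two terms is the simplest way to train one model on both alignment and generation; the symmetric form averages image-to-text and text-to-image directions so neither modality dominates the contrastive signal.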

Results & Applications

The report states that Qwen-Image achieves state-of-the-art results on major VLM benchmarks:

Visual Question Answering (VQA): Strong reasoning ability and robustness across datasets.

Image Captioning: Generates coherent and contextually accurate captions.

Cross-Modal Retrieval: Excels in both image-to-text and text-to-image retrieval.

Applications: Multimodal search, content understanding, AI assistants, and educational tools.
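Cross-modal retrieval with a dual encoder typically reduces to ranking gallery items by cosine similarity between embeddings, and it is commonly scored with Recall@K. The sketch below assumes precomputed image and text embeddings where query `i` is paired with gallery item `i`; `retrieve` and `recall_at_k` are hypothetical helpers, not part of any Qwen-Image API.

```python
import numpy as np

def l2_normalize(x, eps=1e-8):
    """Scale each row to unit L2 norm so dot products equal cosine similarity."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def retrieve(query_emb, gallery_emb, k=5):
    """Return indices of the top-k gallery items for each query, ranked by cosine similarity."""
    sims = l2_normalize(query_emb) @ l2_normalize(gallery_emb).T  # (Q, G)
    k = min(k, sims.shape[1])
    return np.argsort(-sims, axis=1)[:, :k]

def recall_at_k(query_emb, gallery_emb, k=5):
    """Fraction of queries whose paired gallery item (same index) appears in the top-k.
    Assumes query i is paired with gallery item i."""
    topk = retrieve(query_emb, gallery_emb, k)
    targets = np.arange(query_emb.shape[0])[:, None]
    return float((topk == targets).any(axis=1).mean())

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.normal(size=(100, 64))
    txt = img + 0.1 * rng.normal(size=(100, 64))  # toy "aligned" text embeddings
    print("image-to-text R@1:", recall_at_k(img, txt, k=1))
    print("text-to-image R@1:", recall_at_k(txt, img, k=1))
```

The same two functions serve both directions: image-to-text retrieval uses images as queries against a text gallery, and text-to-image retrieval simply swaps the arguments.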